August 14, 2025

4 min read

New Brain Device Is First to Read Out Inner Speech

A new brain prosthesis can read out inner thoughts in real time, helping people with ALS and brain stem stroke communicate fast and comfortably

Andrzej Wojcicki/Science Photo Library/Getty Images

After a brain stem stroke left him almost entirely paralyzed in the 1990s, French journalist Jean-Dominique Bauby wrote a book about his experiences letter by letter, blinking his left eye in response to a helper who repeatedly recited the alphabet. Today people with similar conditions often have far more communication options. Some devices, for example, track eye movements or other small muscle twitches to let users select words from a screen.

And on the cutting edge of this field, neuroscientists have more recently developed brain implants that can turn neural signals directly into whole words. These brain-computer interfaces (BCIs) largely require users to physically attempt to speak, however, and that can be a slow and tiring process. But now a new development in neural prosthetics changes that, allowing users to communicate by simply thinking what they want to say.

The new system relies on much of the same technology as the more common “attempted speech” devices. Both use sensors implanted in a part of the brain called the motor cortex, which sends motion commands to the vocal tract. The brain activation detected by these sensors is then fed into a machine-learning model to interpret which brain signals correspond to which sounds for an individual user. The model then uses those data to predict which word the user is attempting to say.

But the motor cortex doesn’t only light up when we attempt to speak; it’s also involved, to a lesser extent, in imagined speech. The researchers took advantage of this to develop their “inner speech” decoding device and published the results on Thursday in Cell. The team studied three people with amyotrophic lateral sclerosis (ALS) and one with a brain stem stroke, all of whom had previously had the sensors implanted. Using this new “inner speech” system, the participants needed only to think a sentence they wanted to say and it would appear on a screen in real time. While previous inner speech decoders were limited to only a handful of words, the new device allowed participants to draw from a dictionary of 125,000 words.

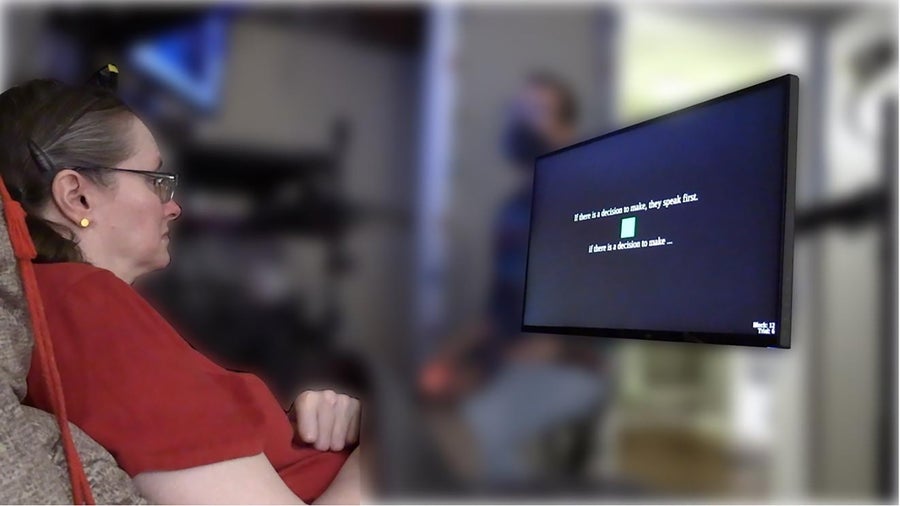

A participant is using the inner speech neuroprosthesis. The text above is the cued sentence, and the text below is what’s being decoded in real time as she imagines speaking the sentence.

“As researchers, our goal is to find a system that’s comfortable [for the user] and ideally reaches a naturalistic ability,” says lead author Erin Kunz, a postdoctoral researcher who’s developing neural prostheses at Stanford University. Previous research found that “physically attempting to speak was tiring and that there were inherent speed limitations with it, too,” she says. Attempted speech devices such as the one used in the study require users to inhale as if they are actually saying the words. But because of impaired breathing, many users need multiple breaths to complete a single word with that method. Attempting to speak can also produce distracting noises and facial expressions that users find undesirable. With the new technology, the study’s participants could communicate at a comfortable conversational rate of about 120 to 150 words per minute, with no more effort than it took to think of what they wanted to say.

Like most BCIs that translate brain activation into speech, the new technology only works if people are able to convert the general idea of what they want to say into a plan for how to say it. Alexander Huth, who researches BCIs at the University of California, Berkeley, and wasn’t involved in the new study, explains that in typical speech, “you start with an idea of what you want to say. That idea gets translated into a plan for how to move your [vocal] articulators. That plan gets sent to the actual muscles, and then they carry it out.” But in many cases, people with impaired speech aren’t able to complete that first step. “This technology only works in cases where the ‘idea to plan’ part is functional but the ‘plan to movement’ part is broken,” Huth says, describing a collection of conditions called dysarthria.

According to Kunz, the four research participants are eager about the new technology. “Largely, [there was] a lot of excitement about potentially being able to communicate fast again,” she says, adding that one participant was particularly thrilled by his newfound ability to interrupt a conversation, something he couldn’t do with the slower pace of an attempted speech device.

To ensure private thoughts remained private, the researchers implemented a code phrase: “chitty chitty bang bang.” When internally spoken by participants, this would prompt the BCI to start or stop transcribing.
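
For readers curious how such a switch might behave, here is a minimal Python sketch of the start-and-stop logic. It is an illustration only, not the researchers’ implementation: the function name `gate_transcript`, the assumption that decoded output arrives as a list of text phrases, and the assumption that transcription starts switched off are all hypothetical.

```python
CODE_PHRASE = "chitty chitty bang bang"  # the code phrase reported in the study


def gate_transcript(decoded_phrases, code_phrase=CODE_PHRASE):
    """Return only the phrases decoded while transcription is switched on.

    Hypothetical helper: assumes the decoder emits plain-text phrases in order.
    """
    transcribing = False  # assumption: the system starts with transcription off
    shown = []
    for phrase in decoded_phrases:
        if phrase.strip().lower() == code_phrase:
            # The code phrase itself is never displayed; it only flips the switch.
            transcribing = not transcribing
            continue
        if transcribing:
            shown.append(phrase)
    return shown
```

In this sketch, anything imagined before the first code phrase or after the second is discarded rather than shown on the screen.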

Brain-reading implants inevitably raise concerns about mental privacy. For now, Huth isn’t concerned about the technology being misused or developed recklessly, speaking to the integrity of the research groups involved in neural prosthetics research. “I think they’re doing great work; they’re led by doctors; they’re very patient-focused. A lot of what they do is really trying to solve problems for the patients,” he says, “even when those problems aren’t necessarily things that we might think of,” such as being able to interrupt a conversation or “making a voice that sounds more like them.”

For Kunz, this research is particularly close to home. “My father actually had ALS and lost the ability to speak,” she says, adding that this is why she got into her field of research. “I kind of became his own personal speech translator toward the end of his life since I was kind of the only one that could understand him. That’s why I personally know the importance and the impact this sort of research can have.”

The contribution and willingness of the research participants are crucial in studies like this, Kunz notes. “The participants that we have are truly incredible individuals who volunteered to be in the study not necessarily to get a benefit to themselves but to help develop this technology for people with paralysis down the road. And I think that they deserve all the credit in the world for that.”