
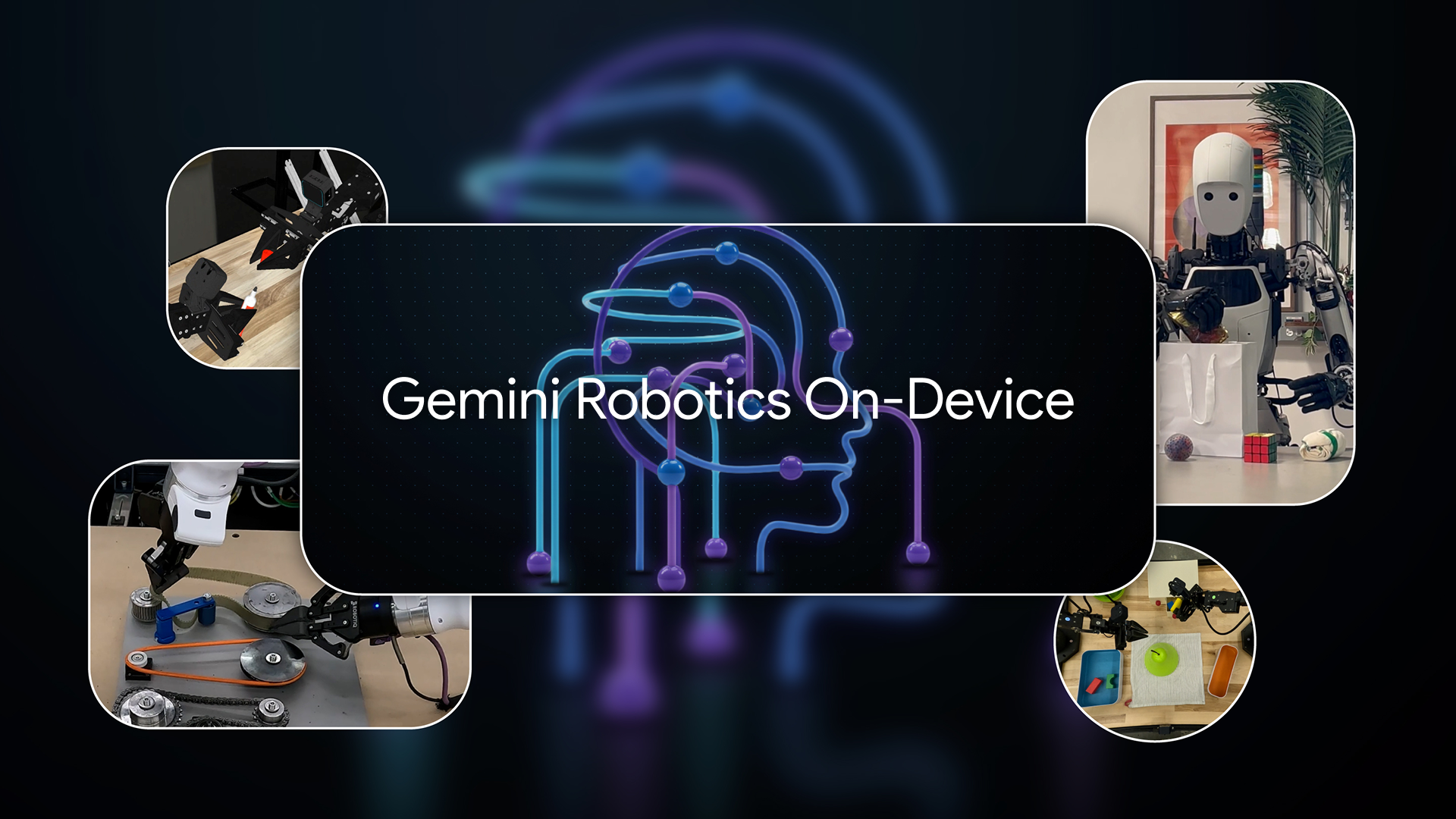

- Google’s new Gemini Robotics On‑Device AI model lets robots run entirely offline

- The model can learn new tasks from just 50 to 100 examples

- It adapts to different robot types, like humanoids or industrial arms, and could be used in rural homes and hospitals

For years, we've been promised robot butlers capable of folding your laundry, chopping your onions, and essaying a witty bon mot like the ones in our favorite period dramas. One thing those promises never mention is that accidentally unplugging your router might shut down that mechanical Jeeves. Google claims its newest Gemini AI model solves that problem, though.

Google DeepMind has unveiled its new Gemini Robotics On‑Device AI model as a way of keeping robots safe from downed power lines and working in rural areas. Although it's not as powerful as the standard cloud-based Gemini models, its independence means it could be much more reliable and useful.

The breakthrough is that the AI, a VLA (vision, language, action) model, can look around, understand what it’s seeing, interpret natural language instructions, and then act on them without needing to look up any words or tasks online. In testing, robots with the model installed completed tasks on unfamiliar objects and in new environments without Googling it.

That might not seem like a big deal, but the world is full of places with limited internet access or none at all. Robots working in rural hospitals, disaster zones, and underground tunnels can’t afford to lag. Now, not only is the model fast, but Google claims it has a great capacity to learn and adapt. The developers claim they can teach the AI new tricks with as few as 50 demonstrations, which is practically instantaneous compared to some of the programs currently used for robot training.

Offline robot AI

That ability to learn and adapt is also evident in the robot’s flexible physical design. The model was first designed to run Google’s own dual-arm ALOHA devices, but has since proven capable of working when installed in much more complex machines, like the Apollo humanoid robot from Apptronik.

The idea of machines that learn quickly and act independently obviously raises some red flags. But Google insists it's being careful. The model comes with built-in safeguards, both in its physical design and in the tasks it will carry out.

You can’t run out and buy a robot with this model installed yet, but a future involving a robot with this model or one of its descendants is easy to picture. Let’s say you buy a robot assistant in five years. You want it to do normal things: fold towels, prep meals, keep your toddler from launching Lego bricks down the stairs. But your other child wanted to see how that box with the blinking lights worked, and suddenly those lights stopped blinking. Luckily, the model installed in your robot can still see and understand what those Lego bricks are and that you are asking it to pick them up and put them back in their bucket.

That’s the real promise of Gemini Robotics On‑Device. It’s not just about bringing AI into the physical world. It’s about making it stick around when the lights flicker. Your future robot butler won’t be a cloud-tethered liability. The robots are coming, and they are truly wireless. Hopefully, that's still a good thing.

